What is an API Gateway?

An API gateway is often the first step towards adopting a microservices architecture. It is a type of proxy server that sits in front of all our backend services and provides a unified interface to clients. It acts as the single entry point into a system, allowing multiple APIs or microservices to act cohesively and provide a uniform experience to the user.

An API gateway receives all API requests from clients. It handles some requests by simply routing them to the appropriate service; for others, it calls the various services required to fulfill the request, aggregates the results, and returns the combined response.

Why API Gateway? What benefits does it provide?

As more and more organizations move to microservices, it becomes imperative to adopt an API management solution that takes on the work of ensuring high availability and performs certain core functions.

A major benefit of using API gateways is that they allow developers to encapsulate the internal structure of an application in multiple ways, depending upon the use case. Listed below are some of the core benefits provided by an API gateway:

- Security Policy Enforcement – API gateways provide a centralized proxy server to manage rate limiting, bot detection, authentication, CORS, etc.

- Routing & Aggregation: Routing requests to the appropriate service is the core of an API gateway. Certain API endpoints may need to join data across multiple services. API gateways can perform this aggregation so that the client doesn’t need complicated call chaining, which reduces the number of round trips. Such aggregation simplifies the client by moving the logic of calling multiple services from the client to the gateway layer. It also gives our backend services some breathing space by taking the thread management logic for assembling responses from various services off them.

- Cross Cutting Concerns: Logging, Caching, and other cross cutting concerns such as analytics can be handled in a centralized place rather than being deployed to every microservice.

- Decoupling: If our clients need to communicate directly with many separate services, renaming or moving those services can be challenging, as the client is coupled to the underlying architecture and organization. API gateways enable us to route based on path, hostname, headers, and other key information, thus helping to decouple the publicly facing API endpoints from the underlying microservice architecture.

- Ability to configure Fallback: In the event of failure of one or more microservices, an API gateway can be configured to serve a fallback response, either from a cache, from some other service, or as a static response.
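
To make the aggregation and fallback points above a little more concrete, here is a minimal sketch in plain Java. It is not how any particular gateway implements these features; the class name, the two backends (`catalog` and `reviews`), the 500 ms timeout, and the fallback values are all assumptions made up for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical sketch: the service names, timeout, and payloads are illustrative only.
class AggregationSketch {

    // Calls one backend asynchronously; on error or timeout, serves the fallback instead.
    static CompletableFuture<String> callWithFallback(Supplier<String> backend, String fallback) {
        return CompletableFuture.supplyAsync(backend)
                .completeOnTimeout(fallback, 500, TimeUnit.MILLISECONDS)
                .exceptionally(error -> fallback);
    }

    // Fans out to two backends in parallel and merges the results into one response.
    static Map<String, String> productPage(Supplier<String> catalog, Supplier<String> reviews) {
        CompletableFuture<String> catalogCall = callWithFallback(catalog, "catalog unavailable");
        CompletableFuture<String> reviewsCall = callWithFallback(reviews, "[]");

        Map<String, String> combined = new LinkedHashMap<>();
        combined.put("catalog", catalogCall.join());
        combined.put("reviews", reviewsCall.join());
        return combined;
    }

    public static void main(String[] args) {
        // The reviews backend fails here, so the static fallback is served in its place.
        Map<String, String> page = productPage(
                () -> "{\"name\":\"sample product\"}",
                () -> { throw new IllegalStateException("reviews service is down"); });
        System.out.println(page);
    }
}
```

The client makes one call and gets one combined response, even though one of the two backends was unavailable. `completeOnTimeout` requires Java 9 or later.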

Solutions Available?

There are a myriad of solutions available when it comes to choosing an API gateway. A few well-known ones include:

- Amazon API Gateway

- Azure API Management

- Apigee

- Kong

- Netflix Zuul

- Express API Gateway

In my view, the primary factors that are taken into consideration while choosing a suitable API gateway are the following:-

- Deployment complexity – how easy or difficult it is to deploy and maintain the gateway service itself

- Open Source vs proprietary – are extension plugins available readily? Is the free tier scalable as per your required traffic?

- On premise or cloud hosted – On-premise installations require additional time to plan, deploy, and maintain. However, cloud hosted solutions can add a bit of latency due to the extra hop and can reduce the availability of your service if the vendor goes down.

- Community support – is there a considerable community using/following your solution where problems can be discussed?

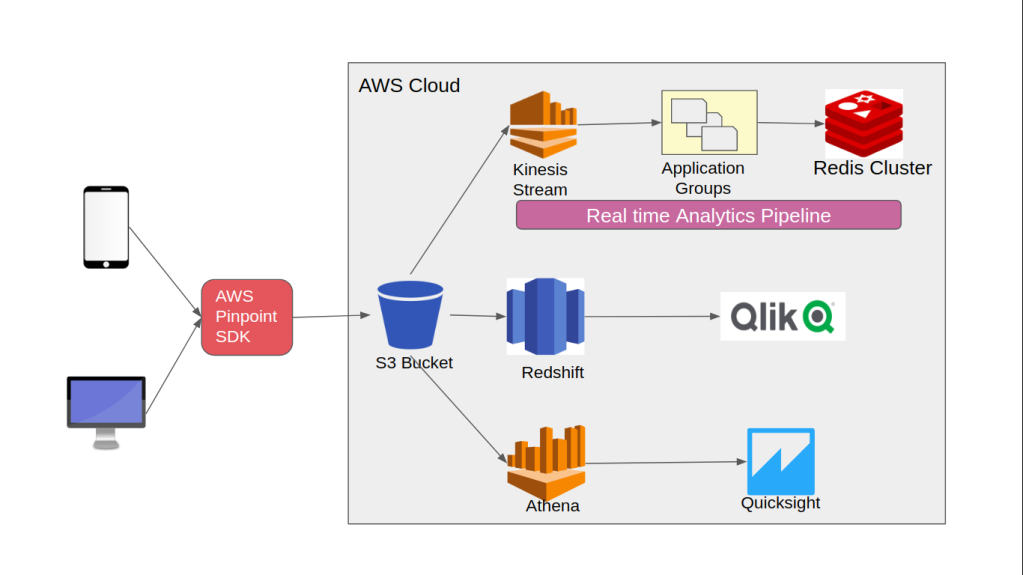

How did HK leverage API gateway?

At HealthKart we chose Netflix Zuul API Gateway (Edge Service) as the front door for our microservices. We have embedded our authentication & security validation at the gateway layer to avoid replicating it across multiple services. We also use the gateway for dynamically routing requests to different backend clusters as needed.

We have also implemented routing rules and custom filters. For example, if we want to add a special tag to the request header before the request reaches the internal microservices, we can do it at this layer.
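
As a rough illustration of what a routing rule boils down to, the sketch below maps public path prefixes to backend clusters and picks the longest matching prefix. This is plain Java rather than Zuul configuration, and the class name, paths, and cluster names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical sketch: the paths and cluster names below are made up for illustration.
class RouteTableSketch {

    // Maps a public path prefix to the name of an internal backend cluster.
    private final Map<String, String> routes = new LinkedHashMap<>();

    RouteTableSketch addRoute(String pathPrefix, String cluster) {
        routes.put(pathPrefix, cluster);
        return this;
    }

    // Picks the cluster whose prefix is the longest match for the request path.
    Optional<String> resolve(String requestPath) {
        String bestPrefix = null;
        for (String prefix : routes.keySet()) {
            boolean longer = bestPrefix == null || prefix.length() > bestPrefix.length();
            if (requestPath.startsWith(prefix) && longer) {
                bestPrefix = prefix;
            }
        }
        return Optional.ofNullable(bestPrefix).map(routes::get);
    }

    public static void main(String[] args) {
        RouteTableSketch table = new RouteTableSketch()
                .addRoute("/api/", "core-cluster")
                .addRoute("/api/orders/", "orders-cluster");
        System.out.println(table.resolve("/api/orders/42"));
        System.out.println(table.resolve("/api/users/7"));
    }
}
```

Because clients only ever see the public prefixes, a service can be renamed or moved to another cluster by changing one entry in the table.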

Netflix Zuul – What & How?

At a high level, Zuul 2.0 is a Netty server that runs pre-filters (inbound filters), then proxies the request using a Netty client, and then returns the response after running post-filters (outbound filters). The filters are where the core of the business logic happens for Zuul. They can perform a very wide range of actions and can run at different points of the request-response lifecycle.
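
The inbound filter, proxy, outbound filter flow can be pictured with a small stand-alone model. The sketch below is not Zuul's API: `Request`, `Response`, and the header names `X-Gateway-Tag` and `X-Served-By` are hypothetical stand-ins, and the "backend" is just a function. It shows an inbound filter adding a header tag before the request is proxied, similar in spirit to the header tagging case mentioned earlier.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical model of the pre-filter -> proxy -> post-filter flow; this is not Zuul's API.
class FilterPipelineSketch {

    // Minimal stand-ins for an HTTP request and response.
    static class Request {
        final String path;
        final Map<String, String> headers = new LinkedHashMap<>();
        Request(String path) { this.path = path; }
    }

    static class Response {
        final String body;
        final Map<String, String> headers = new LinkedHashMap<>();
        Response(String body) { this.body = body; }
    }

    private final List<Consumer<Request>> inboundFilters = new ArrayList<>();
    private final List<Consumer<Response>> outboundFilters = new ArrayList<>();
    private final Function<Request, Response> origin;

    FilterPipelineSketch(Function<Request, Response> origin) {
        this.origin = origin;
    }

    FilterPipelineSketch inbound(Consumer<Request> filter) {
        inboundFilters.add(filter);
        return this;
    }

    FilterPipelineSketch outbound(Consumer<Response> filter) {
        outboundFilters.add(filter);
        return this;
    }

    Response handle(Request request) {
        inboundFilters.forEach(filter -> filter.accept(request));    // runs before proxying
        Response response = origin.apply(request);                    // "proxy" to the backend
        outboundFilters.forEach(filter -> filter.accept(response));  // runs before replying
        return response;
    }

    public static void main(String[] args) {
        FilterPipelineSketch gateway = new FilterPipelineSketch(
                request -> new Response("tag seen by backend: " + request.headers.get("X-Gateway-Tag")))
                .inbound(request -> request.headers.put("X-Gateway-Tag", "edge"))
                .outbound(response -> response.headers.put("X-Served-By", "gateway"));

        Response response = gateway.handle(new Request("/orders/42"));
        System.out.println(response.body + " " + response.headers);
    }
}
```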

Zuul works in conjunction with the Netflix Eureka service. Eureka is a REST based service that is primarily used in the AWS cloud for locating services for the purpose of load balancing and failover of middle-tier servers. Zuul doesn’t generally maintain hard coded network locations (host names and port numbers) of backend microservices. Instead, it interacts with a service registry and dynamically obtains the target network locations.
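
The idea behind registry based lookup can be sketched with an in-memory map. The code below only imitates the concept and is not Eureka's API; the class, the service id `order-service`, and the addresses are hypothetical. Instances register their location on startup, and the gateway asks for the next instance per request, which here is chosen round-robin.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical in-memory registry; it imitates the idea behind Eureka, not its API.
class ServiceRegistrySketch {

    private final Map<String, List<String>> instances = new ConcurrentHashMap<>();
    private final Map<String, AtomicInteger> cursors = new ConcurrentHashMap<>();

    // A service instance calls this on startup to announce its network location.
    void register(String serviceId, String hostAndPort) {
        instances.computeIfAbsent(serviceId, id -> new CopyOnWriteArrayList<>()).add(hostAndPort);
    }

    // The gateway calls this per request; instances are handed out round-robin.
    String nextInstance(String serviceId) {
        List<String> known = instances.get(serviceId);
        if (known == null || known.isEmpty()) {
            throw new IllegalStateException("No registered instance for " + serviceId);
        }
        int turn = cursors.computeIfAbsent(serviceId, id -> new AtomicInteger()).getAndIncrement();
        return known.get(Math.floorMod(turn, known.size()));
    }

    public static void main(String[] args) {
        ServiceRegistrySketch registry = new ServiceRegistrySketch();
        registry.register("order-service", "10.0.0.1:8080");
        registry.register("order-service", "10.0.0.2:8080");

        // No host name is hard coded in the caller; it only knows the service id.
        for (int i = 0; i < 3; i++) {
            System.out.println(registry.nextInstance("order-service"));
        }
    }
}
```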

To get this working on our Edge microservice, Spring Boot, together with Spring Cloud Netflix, provides excellent built-in support; we just had to enable a few configurations. The code snippet is shown below:

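    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.cloud.client.discovery.EnableDiscoveryClient;
    import org.springframework.cloud.netflix.eureka.server.EnableEurekaServer;
    import org.springframework.cloud.netflix.zuul.EnableZuulProxy;
    // Package name for Spring Cloud Finchley and later; earlier releases used org.springframework.cloud.netflix.feign.
    import org.springframework.cloud.openfeign.EnableFeignClients;

    @SpringBootApplication
    @EnableZuulProxy        // turns this application into a Zuul reverse proxy
    @EnableDiscoveryClient  // lets the gateway look up services in the registry
    @EnableEurekaServer     // hosts the Eureka registry in this application
    @EnableFeignClients     // enables declarative Feign REST clients
    public class GatewayServiceApplication {

        public static void main(String[] args) {
            SpringApplication.run(GatewayServiceApplication.class, args);
        }
    }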

At the respective microservice layer, we needed to integrate service discovery so that as soon as a microservice is up, it registers itself with the Eureka server registry. The @EnableDiscoveryClient annotation from Spring Cloud helps us achieve this.

The following properties on the client side enabled registration with the registry:

    eureka.instance.hostname=xxx
    eureka.client.region=default
    eureka.client.registryFetchIntervalSeconds=5
    eureka.client.serviceUrl.defaultZone=xxxx

Conclusion

An API gateway service is a great addition to a microservices architecture and has definitely proved to be a boon for us. We have not yet leveraged it to its full capacity and aim to use it for cross cutting concerns such as logging and caching in the coming months. The end goal is to have every microservice on-boarded onto this API gateway to enable seamless communication, both client to server and server to server.